寫在前面

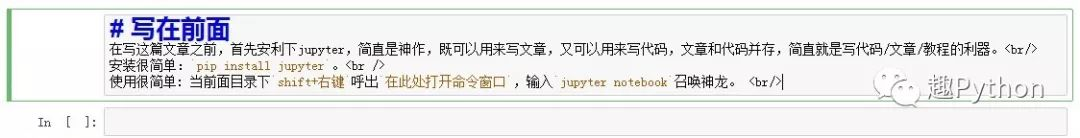

在寫這篇文章之前,首先安利下jupyter,簡直是神作,既可以用來寫文章,又可以用來寫代碼,文章和代碼並存,簡直就是寫代碼/文章/教程的利器。

安裝很簡單: pip install jupyter

使用很簡單: 當前面目錄下 shift+右鍵 呼出 在此處打開命令窗口 ,輸入 jupyter notebook 召喚神龍。

上面這段文字在jupyter中是這樣的(markdown格式):

本文介紹

基於iris數據集進行數據分析。

iris數據集是常用的分類實驗數據集,由Fisher,1936收集整理。iris也稱鳶尾花卉數據集,是一類多重變量分析的數據集。數據集包含150個數據樣本,分為3類,每類50個數據,每個數據包含4個屬性。可通過花萼長度,花萼寬度,花瓣長度,花瓣寬度4個屬性預測鳶尾花卉屬於(Setosa,Versicolour,Virginica)三個種類中的哪一類。(來自百度百科)

數據預處理

首先使用padas相關的庫進行數據讀取,處理和預分析。

pandas的可視化user guide參見:

https://pandas.pydata.org/pandas-docs/stable/user_guide/visualization.html

首先讀取信息,並查看數據的基本信息:可以看到數據的字段,數量,數據類型和大小。

%matplotlib notebook import pandas as pd import matplotlib.pyplot as plt # 讀取數據 iris = pd.read_csv('iris.data.csv')

iris.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 150 entries, 0 to 149

Data columns (total 5 columns):

Sepal.Length 150 non-null float64

Sepal.Width 150 non-null float64

Petal.Length 150 non-null float64

Petal.Width 150 non-null float64

type 150 non-null object

dtypes: float64(4), object(1)

memory usage: 5.9+ KB

[/code]

```code

# 前5個數據 iris.head()

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | type |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 |

# 數據描述 iris.describe()

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width |

|---|---|---|---|

| count | 150.000000 | 150.000000 | 150.000000 |

| mean | 5.843333 | 3.054000 | 3.758667 |

| std | 0.828066 | 0.433594 | 1.764420 |

| min | 4.300000 | 2.000000 | 1.000000 |

| 25% | 5.100000 | 2.800000 | 1.600000 |

| 50% | 5.800000 | 3.000000 | 4.350000 |

| 75% | 6.400000 | 3.300000 | 5.100000 |

| max | 7.900000 | 4.400000 | 6.900000 |

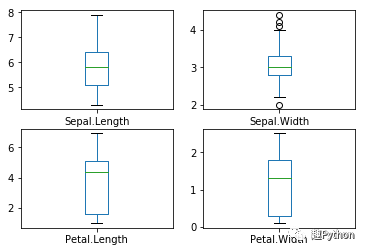

箱線圖描述了數據的分布情況,包括:上下界,上下四分位數和中位數,可以簡單的查看數據的分布情況。

比如:上下四分位數相隔較遠的話,一般可以很容易分為2類。

在《深入淺出統計分析》一書中,一個平均年齡17歲的游泳班,可能是父母帶着嬰兒的早教班,這種情況在箱線圖上就能夠清楚的反映出來。

# 箱線圖 iris.plot(kind='box', subplots=True, layout=(2,2), sharex=False, sharey=False)

Sepal.Length AxesSubplot(0.125,0.536818;0.352273x0.343182)

Sepal.Width AxesSubplot(0.547727,0.536818;0.352273x0.343182)

Petal.Length AxesSubplot(0.125,0.125;0.352273x0.343182)

Petal.Width AxesSubplot(0.547727,0.125;0.352273x0.343182)

dtype: object

[/code]

```code

#直方圖,反饋的是數據的頻度,一般常見的是高斯分布(正態分布)。 iris.hist()

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x000000001418E7B8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x00000000141C3208>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x00000000141EB470>,

<matplotlib.axes._subplots.AxesSubplot object at 0x00000000142146D8>]],

dtype=object)

[/code]

```code

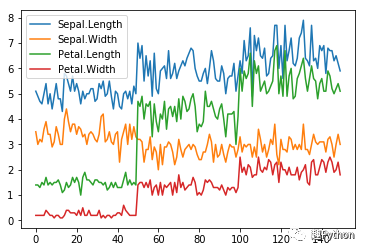

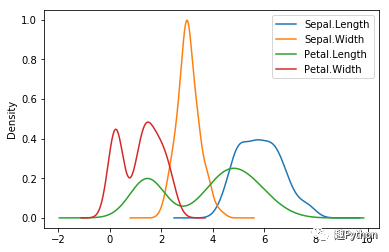

# plot直接展示數據的分布情況,kde核密度估計對比直方圖來看 iris.plot()

iris.plot(kind = 'kde')

<matplotlib.axes._subplots.AxesSubplot at 0x14395518>

[/code]

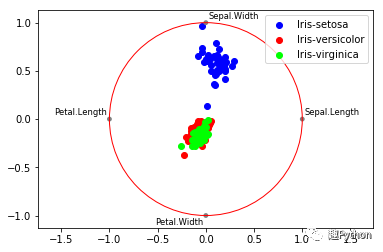

徑向可視化是多維數據降維的可視化方法,不管是數據分析還是機器學習,降維是最基礎的方法之一,通過降維,可以有效的減少復雜度。

徑向坐標可視化是基於彈簧張力最小化算法。

它把數據集的特征映射成二維目標空間單位圓中的一個點,點的位置由系在點上的特征決定。把實例投入圓的中心,特征會朝圓中此實例位置(實例對應的歸一化數值)“拉”實例。

```code

ax = pd.plotting.radviz(iris, 'type', colormap = 'brg') # radviz的源碼中Circle未設置edgecolor,畫圓需要自己處理 ax.add_artist(plt.Circle((0,0), 1, color='r', fill = False))

<matplotlib.patches.Circle at 0x1e68ba58>

[/code]

```code

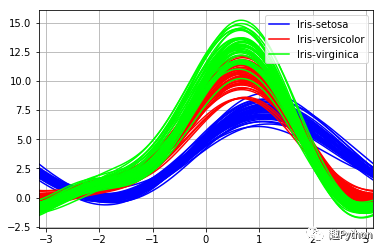

# Andrews曲線將每個樣本的屬性值轉化為傅里葉序列的系數來創建曲線。 # 通過將每一類曲線標成不同顏色可以可視化聚類數據, # 屬於相同類別的樣本的曲線通常更加接近並構成了更大的結構。 pd.plotting.andrews_curves(iris, 'type', colormap='brg')

<matplotlib.axes._subplots.AxesSubplot at 0x1e68b978>

[/code]

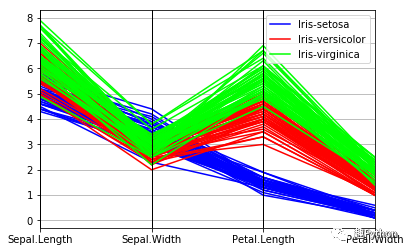

```code

# 平行坐標可以看到數據中的類別以及從視覺上估計其他的統計量。 # 使用平行坐標時,每個點用線段聯接,每個垂直的線代表一個屬性, # 一組聯接的線段表示一個數據點。可能是一類的數據點會更加接近。 pd.plotting.parallel_coordinates(iris, 'type', colormap = 'brg')

<matplotlib.axes._subplots.AxesSubplot at 0x1e931160>

[/code]

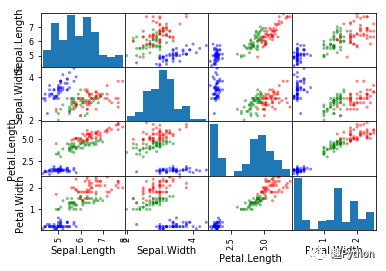

```code

# scatter matrix colors = {'Iris-setosa': 'blue', 'Iris-versicolor': 'green', 'Iris-virginica': 'red'}

pd.plotting.scatter_matrix(iris, color = [colors[type] for type in iris['type']])

array([[<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EB1B6A0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x0000000014349860>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001C67D550>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001434D198>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EB3C6A0>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EB6BC18>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EB9C198>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EBC3748>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EBC3780>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EE0C240>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EE357B8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EE5CD30>],

[<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EE8E2E8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EEB4860>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EEDCDD8>,

<matplotlib.axes._subplots.AxesSubplot object at 0x000000001EF0D390>]],

dtype=object)

[/code]

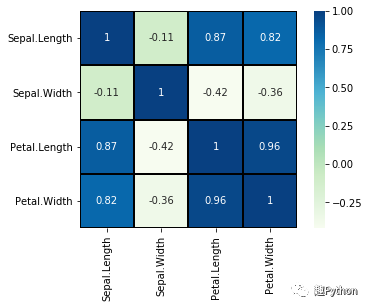

```code

# 相關系數的熱力圖 import seaborn as sea

sea.heatmap(iris.corr(), annot=True, cmap='GnBu', linewidths=1, linecolor='k',square=True)

<matplotlib.axes._subplots.AxesSubplot at 0x1f56ce10>

[/code]

```code

# pandas_profiling這個庫可以對數據集進行初步預覽,並進行報告,很不錯,安裝方式 pip install pandas_profiling # 運行略 # import pandas_profiling as pp # pp.ProfileReport(iris)

# 數據分類 import sklearn as sk from sklearn import preprocessing from sklearn import model_selection

# 預處理 X = iris[['Sepal.Length', 'Sepal.Width', 'Petal.Length', 'Petal.Width']] y = iris['type'] encoder = preprocessing.LabelEncoder() y = encoder.fit_transform(y)

print(y)

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2]

[/code]

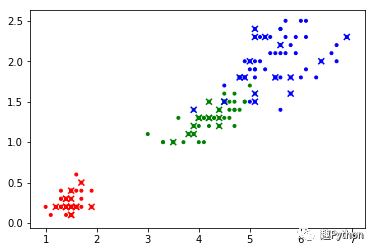

```code

from sklearn import metrics def model_fit_show(model, model_name, X, y, test_size = 0.3, cluster = False):

` train_X, test_X, train_y, test_y = model_selection.train_test_split(X, y,

test_size = 0.3)

print(train_X.shape, test_X.shape, train_y.shape, test_y.shape)

model.fit(train_X, train_y)

prediction = model.predict(test_X) `

print(prediction) if not cluster:

` print('accuracy of {} is: {}'.format(model_name,

metrics.accuracy_score(prediction, test_y)))

pre = model.predict([[4.7, 3.2, 1.3, 0.2]])

print(pre)

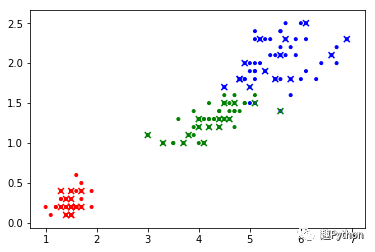

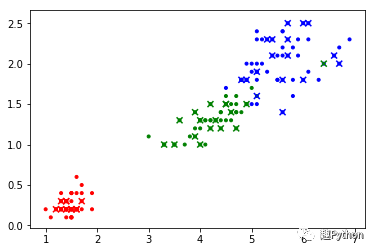

L1 = X['Petal.Length'].values

L2 = X['Petal.Width'].values

cc = 50['r'] + 50['g'] + 50*['b']

plt.scatter(L1, L2, c = cc, marker = '.')

L1 = test_X['Petal.Length'].values

L2 = test_X['Petal.Width'].values

MAP = {0:'r', 1:'g', 2:'b', 3:'y', 4:'k', 5:'w', 6:'m', 7:'c'}

cc = [MAP[_] for _ in prediction]

plt.scatter(L1, L2, c = cc, marker = 's' if cluster else 'x')

plt.show() `

# logistic from sklearn import linear_model

model_fit_show(linear_model.LogisticRegression(), 'LogisticRegression', X, y)

(105, 4) (45, 4) (105,) (45,)

[1 2 2 0 2 0 2 1 0 1 2 1 0 0 0 2 2 1 2 0 2 0 1 2 2 1 0 1 2 1 2 1 0 2 0 1 0

1 0 0 1 2 2 0 0]

accuracy of LogisticRegression is: 0.9111111111111111

[0]

[/code]

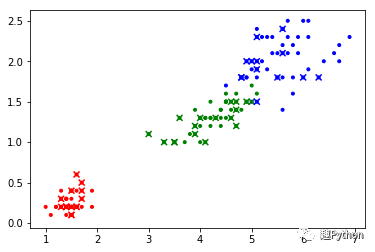

```code

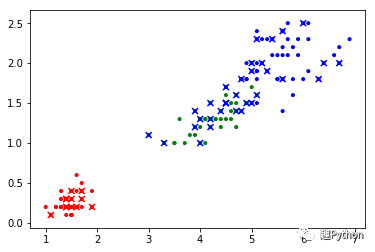

# tree from sklearn import tree

model_fit_show(tree.DecisionTreeClassifier(), 'DecisionTreeClassifier', X, y)

(105, 4) (45, 4) (105,) (45,)

[2 0 1 0 0 0 1 2 0 0 1 0 2 0 2 1 1 0 2 0 2 0 0 1 1 2 0 2 0 1 2 1 1 1 1 2 1

1 2 1 1 2 2 2 0]

accuracy of DecisionTreeClassifier is: 0.9111111111111111

[0]

[/code]

```code

#SVM from sklearn import svm

model_fit_show(svm.SVC(), 'svm.svc', X, y)

(105, 4) (45, 4) (105,) (45,)

[2 0 0 2 0 1 0 2 1 2 2 0 1 1 0 1 1 2 1 0 2 2 2 1 0 2 2 1 1 1 0 1 0 0 2 0 0

2 0 0 1 2 1 0 0]

accuracy of svm.svc is: 0.9111111111111111

[0]

py:193: FutureWarning: The default value of gamma will change from 'auto' to 'scale' in version 0.22 to account better for unscaled features. Set gamma explicitly to 'auto' or 'scale' to avoid this warning.

"avoid this warning.", FutureWarning)

[/code]

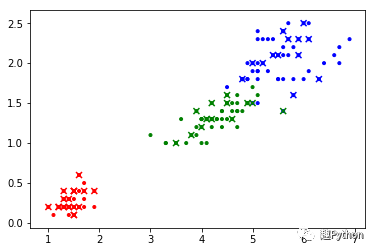

```code

# KNN from sklearn import neighbors

model_fit_show(neighbors.KNeighborsClassifier(), 'neighbors.KNeighborsClassifier', X, y)

(105, 4) (45, 4) (105,) (45,)

[1 2 2 2 0 0 0 1 2 2 1 2 1 1 1 2 0 2 0 0 1 1 0 0 1 0 2 2 0 0 2 2 1 1 0 1 1

0 1 1 2 1 1 0 0]

accuracy of neighbors.KNeighborsClassifier is: 0.9555555555555556

[0]

[/code]

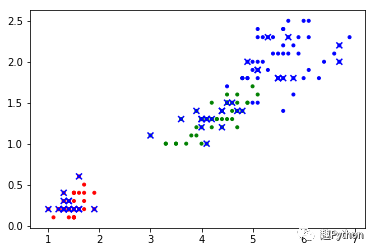

```code

# Kmean from sklearn import cluster

model_fit_show(cluster.KMeans(n_clusters = 3), 'cluster.KMeans', X, y, cluster = True)

(105, 4) (45, 4) (105,) (45,)

[1 2 1 2 2 2 2 2 0 2 2 0 0 2 0 2 0 2 2 1 0 2 1 0 2 2 0 2 0 1 2 2 0 0 2 0 1

1 1 2 0 1 2 1 0]

[2]

[/code]

```code

# naive bayes # https://scikit- learn.org/dev/modules/classes.html#module-sklearn.naive_bayes # 分別是GaussianNB,MultinomialNB和BernoulliNB。 # GaussianNB:先驗為高斯分布的朴素貝葉斯,一般應用於連續值 # MultinomialNB:先驗為多項式分布的朴素貝葉斯,離散多元值分類

# BernoulliNB:先驗為伯努利分布的朴素貝葉斯,離散二值分類 # ComplementNB:對MultinomialNB的補充,適用於非平衡數據 from sklearn import naive_bayes

model_fit_show(naive_bayes.BernoulliNB(), 'naive_bayes.BernoulliNB', X, y) model_fit_show(naive_bayes.GaussianNB(), 'naive_bayes.GaussianNB', X, y) model_fit_show(naive_bayes.MultinomialNB(), 'naive_bayes.MultinomialNB', X, y) model_fit_show(naive_bayes.ComplementNB(), 'naive_bayes.ComplementNB', X, y)

(105, 4) (45, 4) (105,) (45,)

[2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2

2 2 2 2 2 2 2 2]

accuracy of naive_bayes.BernoulliNB is: 0.24444444444444444

[2]

[/code]

```code

(105, 4) (45, 4) (105,) (45,)

[1 0 1 0 0 1 0 0 0 0 0 0 1 1 1 2 1 0 1 1 2 0 0 2 2 1 2 0 0 1 0 2 0 1 0 2 1

2 2 2 0 2 0 2 2]

accuracy of naive_bayes.GaussianNB is: 0.9333333333333333

[0]

[/code]

```code

(105, 4) (45, 4) (105,) (45,)

[0 2 2 1 2 1 2 0 2 1 2 0 2 0 1 1 2 1 0 2 2 2 0 1 1 1 0 2 2 1 1 2 1 0 0 1 0

0 1 1 2 2 1 2 1]

accuracy of naive_bayes.MultinomialNB is: 0.9555555555555556

[0]

[/code]

```code

(105, 4) (45, 4) (105,) (45,)

[2 2 2 2 2 2 2 2 2 2 2 0 2 2 2 0 2 2 2 2 0 0 0 2 2 2 0 0 2 2 2 0 2 2 2 0 0

2 0 2 2 2 2 0 2]

accuracy of naive_bayes.ComplementNB is: 0.6

[0]

[/code]

```code

(↓ - 有些內容只在小龍家發,可關注同名“趣Python”號,謝謝 - ↓)