下面是一些USB攝像頭的驅動(大多數攝像頭都支持uvc標准):

1 使用軟件庫里的uvc-camera功能包

1.1 檢查攝像頭

lsusb

-------------------------------------

顯示如下:

Bus 002 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 001 Device 007: ID 046d:082b Logitech, Inc. Webcam C170

Bus 001 Device 006: ID 0461:4e2a Primax Electronics, Ltd

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

1.2 安裝uvc camera功能包

sudo apt-get install ros-indigo-uvc-camera

1.3 安裝image相關功能包

sudo apt-get install ros-kinetic-image-*

sudo apt-get install ros-kinetic-rqt-image-view

1.4 運行uvc_camera節點

rosrun uvc_camera uvc_camera_node

1.5 查看圖像信息

(1)使用image_view節點查看圖像

rosrun image_view image_view image:=/image_raw

-------------------------------------

說明:最后面的附加選項“image:=/image_raw”是把話題列表中的話題以圖像形式查看的選項。

(2)用rqt_image_view節點檢查

rqt_image_view image:=/image_raw

(3)使用rviz查看

rviz

增加image,然后將[Image] → [Image Topic]的值更改為“/image_raw”。

使用apt-get安裝的軟件包好像只有執行程序,沒有launch文件和節點源文件等等,所以采用了自建uvc-camera軟件包更該參數。

2 使用usb_cam軟件包

2.1 安裝usb_cam軟件包

sudo apt-get install ros-kinetic-usb-cam

2.2 啟用launch文件

roslaunch usb_cam usb_cam-test.launch

-------------------------------------

顯示如下:

launch文件的目錄為:/opt/ros/kinetic/share/usb_cam,可在該目錄下找到luanch文件並修改參數。

3 使用opencv驅動USB攝像頭

首先創建一個工作空間:

$ mkdir -p ~/ros_ws/src

$ cd ~/ros_ws/

$ catkin_make

$ source devel/setup.bash

再建立一個功能包:

$ cd ~/ros_ws/src

$ catkin_create_pkg learning_image_transport roscpp std_msgs cv_bridge image_transport sensor_msgs

然后在功能包learning_image_transport下的src目錄中建立兩個cpp文件:

$ cd ~/ros_ws/src/learning_image_transport/src/

$ gedit my_publisher.cpp

然后在功能包learning_image_transport下的src目錄中建立兩個cpp文件:

$ cd ~/ros_ws/src/learning_image_transport/src/

$ gedit my_publisher.cpp

將下列代碼復制進去:

#include <ros/ros.h>

#include <image_transport/image_transport.h>

#include <opencv2/highgui/highgui.hpp>

#include <cv_bridge/cv_bridge.h>

#include <sstream> // for converting the command line parameter to integer

int main(int argc, char** argv)

{

// Check if video source has been passed as a parameter

if(argv[1] == NULL)

{

ROS_INFO("argv[1]=NULL\n");

return 1;

}

ros::init(argc, argv, "image_publisher");

ros::NodeHandle nh;

image_transport::ImageTransport it(nh);

image_transport::Publisher pub = it.advertise("camera/image", 1);

// Convert the passed as command line parameter index for the video device to an integer

std::istringstream video_sourceCmd(argv[1]);

int video_source;

// Check if it is indeed a number

if(!(video_sourceCmd >> video_source))

{

ROS_INFO("video_sourceCmd is %d\n",video_source);

return 1;

}

cv::VideoCapture cap(video_source);

// Check if video device can be opened with the given index

if(!cap.isOpened())

{

ROS_INFO("can not opencv video device\n");

return 1;

}

cv::Mat frame;

sensor_msgs::ImagePtr msg;

ros::Rate loop_rate(5);

while (nh.ok())

{

cap >> frame;

// Check if grabbed frame is actually full with some content

if(!frame.empty())

{

msg = cv_bridge::CvImage(std_msgs::Header(), "bgr8", frame).toImageMsg();

pub.publish(msg);

//cv::Wait(1);

}

}

ros::spinOnce();

loop_rate.sleep();

}

保存以后,繼續創建my_subscriber.cpp:

$ gedit my_subscriber.cpp

復制下列代碼:

#include <ros/ros.h>

#include <image_transport/image_transport.h>

#include <opencv2/highgui/highgui.hpp>

#include <cv_bridge/cv_bridge.h>

void imageCallback(const sensor_msgs::ImageConstPtr& msg)

{

try

{

cv::imshow("view", cv_bridge::toCvShare(msg, "bgr8")->image);

// cv::waitKey(30);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

int main(int argc, char **argv)

{

ros::init(argc, argv, "image_listener");

ros::NodeHandle nh;

cv::namedWindow("view");

cv::startWindowThread();

image_transport::ImageTransport it(nh);

image_transport::Subscriber sub = it.subscribe("camera/image", 1,imageCallback);

ros::spin();

cv::destroyWindow("view");

}

接下來要把涉及到的各種包和opencv在CMakeList中聲明一下,回到程序包目錄下。

$ cd ~/ros_ws/src/learning_image_transport/

$ gedit CMakeLists.txt

添加以下語句:

find_package(OpenCV REQUIRED)

# add the publisher example

add_executable(my_publisher src/my_publisher.cpp)

target_link_libraries(my_publisher ${catkin_LIBRARIES} ${OpenCV_LIBRARIES})

# add the subscriber example

add_executable(my_subscriber src/my_subscriber.cpp)

target_link_libraries(my_subscriber ${catkin_LIBRARIES} ${OpenCV_LIBRARIES})

將這個包進行編譯:

$ cd ~/ros_ws/

$ catkin_make

接下來開始運行程序,首先啟動ROS。

$ roscore

運行my_publisher節點.(如果運行不起來,需要先source devel/setup.bash)。

$ rosrun learning_image_transport my_publisher 0

這時候會看到我們的攝像頭燈已經亮起來了,0代表默認攝像頭,如果有多個攝像頭,則第二個是1,依次類推。

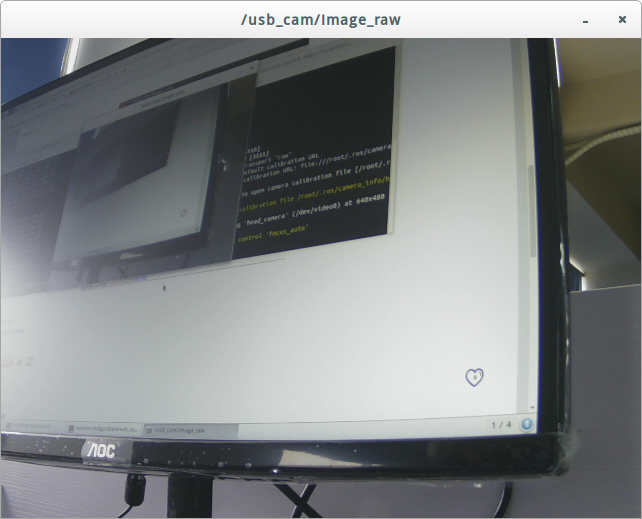

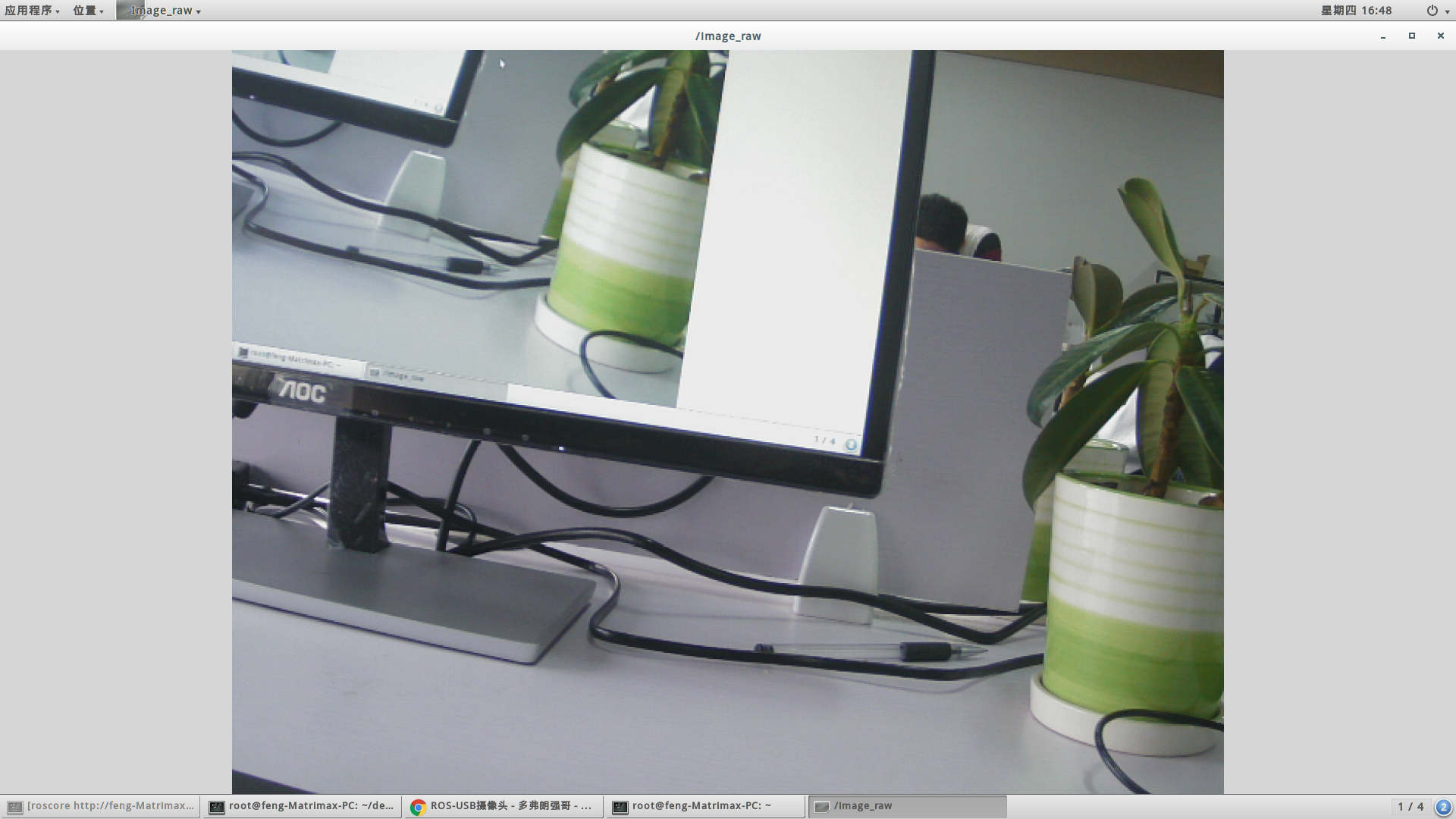

接下來運行my_subscriber節點來接收圖像。

$ rosrun learning_image_transport my_subscriber

這時候如果沒有錯誤的話就會彈出圖像窗口,如下所示:

參考:

ROS學習筆記(一):在ROS中使用USB網絡攝像頭傳輸圖像