
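Preface

After reading YOLOX I realized how powerful its data augmentation is. But when I tried to work out how to implement it myself, I couldn't come up with a clean implementation. Then it occurred to me that augmentation tricks like these will certainly be reused in the dataloaders of other networks, so I studied the code carefully and reimplemented it.

At the end I include my own wrapped-up versions of mosaic and mixup. If I don't write my own wrapper, then when I need it later and copy someone else's code on the spot, I won't know where the bugs are. The core is nearly the same as the original, though.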

Mosaic

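Source code analysis

The analysis below follows the YOLOX source code.

YOLOX first builds an ordinary Dataset class that can be iterated over, and for that it provides a pull_item function, which loads one image together with its labels.

On top of that, the MosaicDetection class is defined: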

```python
class MosaicDetection(Dataset):
    """Detection dataset wrapper that performs mixup for normal dataset."""

    def __init__(
        self, dataset, img_size, mosaic=True, preproc=None,
        degrees=10.0, translate=0.1, mosaic_scale=(0.5, 1.5),
        mixup_scale=(0.5, 1.5), shear=2.0, perspective=0.0,
        enable_mixup=True, mosaic_prob=1.0, mixup_prob=1.0, *args
    ):
        super().__init__(img_size, mosaic=mosaic)
        self._dataset = dataset
        self.preproc = preproc
        self.degrees = degrees
        self.translate = translate
        self.scale = mosaic_scale
        self.shear = shear
        self.perspective = perspective
        self.mixup_scale = mixup_scale
        self.enable_mosaic = mosaic
        self.enable_mixup = enable_mixup
        self.mosaic_prob = mosaic_prob
        self.mixup_prob = mixup_prob
        self.local_rank = get_local_rank()
```

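I won't go over what each parameter means. The key is the self._dataset field: it shows that Mosaic is built as a wrapper on top of the original Dataset.

In other words, all that needs to be done is to override `__getitem__` and `__len__`. Let's start with `__getitem__`.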
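To make the wrapper idea concrete, here is a toy sketch with no image processing at all. It assumes only that the inner dataset has `__len__` and a `pull_item(index)` method; `ToyDataset` and `WrapperDataset` are hypothetical names, and a real version would subclass `torch.utils.data.Dataset` and actually stitch the images.

```python
import random


class ToyDataset:
    """Hypothetical inner dataset: pull_item returns one raw sample (image, labels)."""

    def __init__(self, n):
        self.samples = [(f"img{i}", [i]) for i in range(n)]

    def __len__(self):
        return len(self.samples)

    def pull_item(self, index):
        return self.samples[index]


class WrapperDataset:
    """Same structure as MosaicDetection: keep the inner dataset in self._dataset,
    delegate __len__, and build each output in __getitem__ from several pull_item calls."""

    def __init__(self, dataset, mosaic_prob=1.0):
        self._dataset = dataset
        self.mosaic_prob = mosaic_prob

    def __len__(self):
        return len(self._dataset)

    def __getitem__(self, idx):
        if random.random() < self.mosaic_prob:
            # the requested sample plus 3 random ones, as in MosaicDetection
            indices = [idx] + [random.randint(0, len(self._dataset) - 1) for _ in range(3)]
            items = [self._dataset.pull_item(i) for i in indices]
            # a real implementation would paste the four images onto one canvas here
            imgs = [img for img, _ in items]
            labels = [lab for _, labs in items for lab in labs]
            return imgs, labels
        # no mosaic: return the sample unchanged
        return self._dataset.pull_item(idx)


ds = WrapperDataset(ToyDataset(10))
imgs, labels = ds[3]
print(len(ds), imgs[0], len(imgs), len(labels))  # 10 img3 4 4
```

The wrapper never touches how images are read; it only decides which samples to combine, which is why the same wrapper can sit on top of any dataset exposing `pull_item`.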

Part 1: stitching the images

```python
    def __getitem__(self, idx):
        if self.enable_mosaic and random.random() < self.mosaic_prob:
            mosaic_labels = []
            input_dim = self._dataset.input_dim
            input_h, input_w = input_dim[0], input_dim[1]

            # yc, xc = s, s  # mosaic center x, y
            # the canvas is (2 * input_h, 2 * input_w)
            # position of the point shared by the four images (the mosaic center)
            yc = int(random.uniform(0.5 * input_h, 1.5 * input_h))
            xc = int(random.uniform(0.5 * input_w, 1.5 * input_w))

            # 3 additional image indices
            indices = [idx] + [random.randint(0, len(self._dataset) - 1) for _ in range(3)]

            for i_mosaic, index in enumerate(indices):
                img, _labels, _, img_id = self._dataset.pull_item(index)
                # original size of the current image
                h0, w0 = img.shape[:2]
                scale = min(1. * input_h / h0, 1. * input_w / w0)
                # resize so the image fits into input size
                img = cv2.resize(
                    img, (int(w0 * scale), int(h0 * scale)), interpolation=cv2.INTER_LINEAR
                )
                # generate output mosaic image
                (h, w, c) = img.shape[:3]
                # create a new canvas filled with the value 114 (gray)
                if i_mosaic == 0:
                    mosaic_img = np.full((input_h * 2, input_w * 2, c), 114, dtype=np.uint8)

                # suffix l means large image, while s means small image in mosaic aug.
                # images are placed top-left, top-right, bottom-left, bottom-right in order.
                # returns the coordinates on the canvas and the matching coordinates in the
                # image (because the center is drawn from 0.5 to 1.5 times the input size,
                # the image may extend beyond the canvas and get cut off)
                (l_x1, l_y1, l_x2, l_y2), (s_x1, s_y1, s_x2, s_y2) = get_mosaic_coordinate(
                    mosaic_img, i_mosaic, xc, yc, w, h, input_h, input_w
                )

                # paste onto the canvas
                mosaic_img[l_y1:l_y2, l_x1:l_x2] = img[s_y1:s_y2, s_x1:s_x2]
                # (my addition, not in YOLOX) show the canvas after each paste
                plt.imshow(mosaic_img)
                plt.show()
                # offset from image coordinates to canvas coordinates
                padw, padh = l_x1 - s_x1, l_y1 - s_y1

                labels = _labels.copy()
                # Normalized xywh to pixel xyxy format
                # I think this comment is misleading (or maybe I misread it?), see below.
                # What the code does: map the boxes into canvas coordinates.
                if _labels.size > 0:
                    # top-left corner
                    labels[:, 0] = scale * _labels[:, 0] + padw
                    labels[:, 1] = scale * _labels[:, 1] + padh
                    # bottom-right corner
                    labels[:, 2] = scale * _labels[:, 2] + padw
                    labels[:, 3] = scale * _labels[:, 3] + padh
                mosaic_labels.append(labels)

            # (my addition, not in YOLOX) show the finished mosaic
            plt.imshow(mosaic_img)
            plt.show()
```

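The rough idea: randomly pick four images, create a canvas twice the network input size, and randomly generate a mosaic center between 0.5 and 1.5 times the input size (think of it as the corner the four images share). The images are then pasted in order into the top-left, top-right, bottom-left and bottom-right regions.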

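When an image is pasted onto the canvas, we get its x and y offsets relative to its own coordinates (padw, padh), and then compute the shifted bbox positions.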
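A toy run of the quadrant logic may help. It uses an 8x8 "input size" (so a 16x16 canvas) and four 8x8 images, each filled with its own index; `get_mosaic_coordinate` is reproduced from the code at the end of this post minus its unused `mosaic_image` argument, and the center (6, 10) is picked by hand instead of being sampled.

```python
import numpy as np


def get_mosaic_coordinate(mosaic_index, xc, yc, w, h, input_h, input_w):
    # same quadrant logic as get_mosaic_coordinate at the end of this post
    if mosaic_index == 0:    # top-left: the image's bottom-right corner sits on (xc, yc)
        x1, y1, x2, y2 = max(xc - w, 0), max(yc - h, 0), xc, yc
        small = w - (x2 - x1), h - (y2 - y1), w, h
    elif mosaic_index == 1:  # top-right: the image's bottom-left corner sits on the center
        x1, y1, x2, y2 = xc, max(yc - h, 0), min(xc + w, input_w * 2), yc
        small = 0, h - (y2 - y1), min(w, x2 - x1), h
    elif mosaic_index == 2:  # bottom-left: the image's top-right corner sits on the center
        x1, y1, x2, y2 = max(xc - w, 0), yc, xc, min(input_h * 2, yc + h)
        small = w - (x2 - x1), 0, w, min(y2 - y1, h)
    else:                    # bottom-right: the image's top-left corner sits on the center
        x1, y1, x2, y2 = xc, yc, min(xc + w, input_w * 2), min(input_h * 2, yc + h)
        small = 0, 0, min(w, x2 - x1), min(y2 - y1, h)
    return (x1, y1, x2, y2), small


input_h = input_w = 8                          # tiny "network input" for illustration
canvas = np.full((2 * input_h, 2 * input_w), 114, dtype=np.uint8)
xc, yc = 6, 10                                 # hand-picked center inside [4, 12)
offsets = []
for i in range(4):
    img = np.full((8, 8), i, dtype=np.uint8)   # every "resized image" is 8x8, filled with its index
    h, w = img.shape
    (lx1, ly1, lx2, ly2), (sx1, sy1, sx2, sy2) = get_mosaic_coordinate(i, xc, yc, w, h, input_h, input_w)
    canvas[ly1:ly2, lx1:lx2] = img[sy1:sy2, sx1:sx2]  # parts past the canvas edge are dropped
    offsets.append((lx1 - sx1, ly1 - sy1))            # (padw, padh) for this image's boxes
print(offsets)  # [(-2, 2), (6, 2), (-2, 10), (6, 10)]
```

A negative padw (images 0 and 2) means the left part of that image was cut off by the canvas edge. The box formula `scale * x + padw` still holds in that case; boxes that end up outside the canvas are handled later by `np.clip`.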

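One open question concerns the new bbox coordinates. The comment says the code converts xywh to x1y1x2y2, but in my own implementation the boxes were drawn correctly only when the input bboxes were already x1y1x2y2. Could anyone in the comments explain this?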
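One possible answer, offered as my reading of the code rather than a confirmed explanation: as far as I can tell, YOLOX's COCO loader already converts COCO's x, y, w, h boxes into pixel x1y1x2y2 when it loads the annotations, and the comment looks inherited from YOLOv5's `load_mosaic`, where normalized xywh labels are converted with `xywhn2xyxy`. The check below (helper names are hypothetical) shows why the four assignments only make sense for corner boxes: if xywh is fed in, padw/padh get added to w and h too and the box breaks.

```python
import numpy as np


def shift_xyxy(boxes, scale, padw, padh):
    """The four assignments in __getitem__: scale both corners, then add the paste offset."""
    out = boxes.astype(np.float64)  # astype returns a copy
    out[:, [0, 2]] = scale * boxes[:, [0, 2]] + padw
    out[:, [1, 3]] = scale * boxes[:, [1, 3]] + padh
    return out


def xywh_to_xyxy(boxes):
    """Pixel (x_center, y_center, w, h) -> (x1, y1, x2, y2)."""
    cx, cy, w, h = boxes.T
    return np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)


xywh = np.array([[50.0, 40.0, 20.0, 10.0]])  # center (50, 40), size 20 x 10
xyxy = xywh_to_xyxy(xywh)                     # corners (40, 35) and (60, 45)

correct = shift_xyxy(xyxy, scale=2.0, padw=100, padh=30)  # labels really are xyxy
wrong = shift_xyxy(xywh, scale=2.0, padw=100, padh=30)    # xywh fed in: w and h shifted too
print(correct.tolist())  # [[180.0, 100.0, 220.0, 120.0]]
print(wrong.tolist())    # [[200.0, 110.0, 140.0, 50.0]]  x2 < x1, not a valid box
```

The "correct" result matches scaling and shifting the box in center form (center (200, 110), size 40 x 20), which is what you would expect from a valid coordinate transform.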

Part 2: rotation and shearing

```python
            if len(mosaic_labels):
                # clip the parts of the boxes that fall outside the canvas to the canvas edge
                mosaic_labels = np.concatenate(mosaic_labels, 0)
                np.clip(mosaic_labels[:, 0], 0, 2 * input_w, out=mosaic_labels[:, 0])
                np.clip(mosaic_labels[:, 1], 0, 2 * input_h, out=mosaic_labels[:, 1])
                np.clip(mosaic_labels[:, 2], 0, 2 * input_w, out=mosaic_labels[:, 2])
                np.clip(mosaic_labels[:, 3], 0, 2 * input_h, out=mosaic_labels[:, 3])

            # random affine transform: rotation by a random angle in [-degrees, degrees]
            # plus scaling, shearing and translation; returns the new image and boxes
            mosaic_img, mosaic_labels = random_perspective(
                mosaic_img,
                mosaic_labels,
                degrees=self.degrees,
                translate=self.translate,
                scale=self.scale,
                shear=self.shear,
                perspective=self.perspective,
                border=[-input_h // 2, -input_w // 2],
            )  # border to remove
```

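This part is fairly simple. First np.clip clamps the boxes to the canvas, then the canvas is rotated by a random angle (together with a random scale, shear and translation). You don't really need to worry about how the bbox coordinates change after the rotation: the angle is small, so the object hardly extends beyond its box, and random_perspective updates the boxes itself by transforming their four corners. I didn't dig into the rest of the rotation code; read it yourself if you want the details. Finally, the result is cropped back to input size (the negative border of -input_h // 2 and -input_w // 2 removes half an input from each side of the 2x canvas), so the output is input size rather than 2 * input size.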
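For the box side of that transform, here is a numpy-only sketch (no cv2, helper names hypothetical). It assumes the matrix form documented for `cv2.getRotationMatrix2D`, which with center (0, 0) reduces to [[α, β, 0], [−β, α, 0]] with α = scale·cos(angle) and β = scale·sin(angle), and it warps boxes the way random_perspective does: transform all four corners, then take the enclosing axis-aligned box. The border arithmetic is simpler: the output height is 2·input_h + 2·(−input_h // 2), which is input_h for an even input_h.

```python
import numpy as np


def rotation_scale_matrix(angle_deg, scale):
    """3x3 form of cv2.getRotationMatrix2D(center=(0, 0), angle=angle_deg, scale=scale)."""
    a = np.deg2rad(angle_deg)
    alpha, beta = scale * np.cos(a), scale * np.sin(a)
    return np.array([[alpha, beta, 0.0],
                     [-beta, alpha, 0.0],
                     [0.0, 0.0, 1.0]])


def warp_boxes(boxes, M):
    """Warp (n, 4) x1y1x2y2 boxes with a 3x3 affine matrix, as random_perspective does:
    transform all four corners, then take the axis-aligned box that encloses them."""
    n = len(boxes)
    xy = np.ones((n * 4, 3))
    xy[:, :2] = boxes[:, [0, 1, 2, 3, 0, 3, 2, 1]].reshape(n * 4, 2)  # x1y1, x2y2, x1y2, x2y1
    xy = (xy @ M.T)[:, :2].reshape(n, 8)
    x, y = xy[:, [0, 2, 4, 6]], xy[:, [1, 3, 5, 7]]
    return np.stack([x.min(1), y.min(1), x.max(1), y.max(1)], axis=1)


# a 100 x 20 box rotated by 10 degrees: the enclosing box grows to about 102 x 37
box = np.array([[0.0, 0.0, 100.0, 20.0]])
rotated = warp_boxes(box, rotation_scale_matrix(10, 1.0))
print(rotated.round(2))
```

Even a small angle makes the box noticeably looser for elongated objects (here the area almost doubles), which is one reason `box_candidates` is applied afterwards.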

Mix up

After mosaic, YOLOX also applies mixup (optional, but enabled by default):

```python
            # -----------------------------------------------------------------
            # CopyPaste: https://arxiv.org/abs/2012.07177
            # -----------------------------------------------------------------
            if (
                self.enable_mixup
                and not len(mosaic_labels) == 0
                and random.random() < self.mixup_prob
                # (my note) with mosaic_prob=0.5 and mixup_prob=0.5,
                # mixup is applied with probability 0.5 * 0.5 = 0.25
            ):
                mosaic_img, mosaic_labels = self.mixup(mosaic_img, mosaic_labels, self.input_dim)
            # further preprocessing (preproc) is applied here
            mix_img, padded_labels = self.preproc(mosaic_img, mosaic_labels, self.input_dim)
            img_info = (mix_img.shape[1], mix_img.shape[0])

            # -----------------------------------------------------------------
            # img_info and img_id are not used for training.
            # They are also hard to be specified on a mosaic image.
            # -----------------------------------------------------------------
            return mix_img, padded_labels, img_info, img_id

        else:
            # this else pairs with the mosaic `if`: without mosaic, only preprocessing is applied
            self._dataset._input_dim = self.input_dim
            img, label, img_info, img_id = self._dataset.pull_item(idx)
            img, label = self.preproc(img, label, self.input_dim)
            return img, label, img_info, img_id
```
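The 0.25 in the comment above can be checked with a quick simulation. This sketch (hypothetical helper) reproduces only the two random draws: mixup is reached only inside the mosaic branch, so both draws must succeed; the extra "mosaic_labels is non-empty" condition is ignored.

```python
import random


def simulate_mixup_rate(mosaic_prob, mixup_prob, trials=100_000, seed=0):
    """Estimate how often mixup runs when it is nested inside the mosaic branch."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # the second draw only happens if the first one succeeds, as in __getitem__
        if rng.random() < mosaic_prob and rng.random() < mixup_prob:
            hits += 1
    return hits / trials


print(simulate_mixup_rate(0.5, 0.5))  # close to 0.5 * 0.5 = 0.25
```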

```python
    # the mixup function
    def mixup(self, origin_img, origin_labels, input_dim):
        jit_factor = random.uniform(*self.mixup_scale)
        # whether to flip the image horizontally
        FLIP = random.uniform(0, 1) > 0.5
        cp_labels = []
        # make sure the sample is not pure background; load_anno does not read the
        # image, so checking it first is faster (COCO dataset class)
        while len(cp_labels) == 0:
            cp_index = random.randint(0, self.__len__() - 1)
            cp_labels = self._dataset.load_anno(cp_index)
        # load the image only once we know it has boxes
        img, cp_labels, _, _ = self._dataset.pull_item(cp_index)

        # create the canvas
        if len(img.shape) == 3:
            cp_img = np.ones((input_dim[0], input_dim[1], 3), dtype=np.uint8) * 114
        else:
            cp_img = np.ones(input_dim, dtype=np.uint8) * 114

        # compute the scale
        cp_scale_ratio = min(input_dim[0] / img.shape[0], input_dim[1] / img.shape[1])
        # resize
        resized_img = cv2.resize(
            img,
            (int(img.shape[1] * cp_scale_ratio), int(img.shape[0] * cp_scale_ratio)),
            interpolation=cv2.INTER_LINEAR,
        )
        # paste into the top-left of the canvas
        cp_img[
            : int(img.shape[0] * cp_scale_ratio), : int(img.shape[1] * cp_scale_ratio)
        ] = resized_img

        # scale the whole canvas by jit_factor
        cp_img = cv2.resize(
            cp_img,
            (int(cp_img.shape[1] * jit_factor), int(cp_img.shape[0] * jit_factor)),
        )
        cp_scale_ratio *= jit_factor

        if FLIP:
            cp_img = cp_img[:, ::-1, :]

        # the image to be mixed in is ready; now the mixup itself:
        # the new canvas is overlaid on the input image
        origin_h, origin_w = cp_img.shape[:2]
        target_h, target_w = origin_img.shape[:2]
        # take the larger size in each dimension and pad everything with 0
        padded_img = np.zeros(
            (max(origin_h, target_h), max(origin_w, target_w), 3), dtype=np.uint8
        )
        # only the new canvas is placed in it
        padded_img[:origin_h, :origin_w] = cp_img

        # random crop offset
        x_offset, y_offset = 0, 0
        if padded_img.shape[0] > target_h:
            y_offset = random.randint(0, padded_img.shape[0] - target_h - 1)
        if padded_img.shape[1] > target_w:
            x_offset = random.randint(0, padded_img.shape[1] - target_w - 1)
        # crop to the size of the input image
        padded_cropped_img = padded_img[
            y_offset: y_offset + target_h, x_offset: x_offset + target_w
        ]

        # bbox coordinates on the rescaled canvas
        cp_bboxes_origin_np = adjust_box_anns(
            cp_labels[:, :4].copy(), cp_scale_ratio, 0, 0, origin_w, origin_h
        )
        # mirror the boxes if the image was flipped
        if FLIP:
            cp_bboxes_origin_np[:, 0::2] = (
                origin_w - cp_bboxes_origin_np[:, 0::2][:, ::-1]
            )
        # shift the boxes into the crop (its top-left corner becomes the new origin)
        cp_bboxes_transformed_np = cp_bboxes_origin_np.copy()
        cp_bboxes_transformed_np[:, 0::2] = np.clip(
            cp_bboxes_transformed_np[:, 0::2] - x_offset, 0, target_w
        )
        cp_bboxes_transformed_np[:, 1::2] = np.clip(
            cp_bboxes_transformed_np[:, 1::2] - y_offset, 0, target_h
        )
        # keep only boxes that are still valid after the crop (box_candidates is explained
        # below; the 5 passed here is wh_thr, the minimum width/height in pixels)
        keep_list = box_candidates(cp_bboxes_origin_np.T, cp_bboxes_transformed_np.T, 5)

        # if at least one box survives, merge the labels and blend the images
        if keep_list.sum() >= 1.0:
            cls_labels = cp_labels[keep_list, 4:5].copy()
            box_labels = cp_bboxes_transformed_np[keep_list]
            labels = np.hstack((box_labels, cls_labels))
            origin_labels = np.vstack((origin_labels, labels))
            origin_img = origin_img.astype(np.float32)
            origin_img = 0.5 * origin_img + 0.5 * padded_cropped_img.astype(np.float32)

        return origin_img.astype(np.uint8), origin_labels
```

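Overall this is fairly easy to follow, because the coordinates are transformed the same way as in mosaic, and the coordinate transformation is the most painful part.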

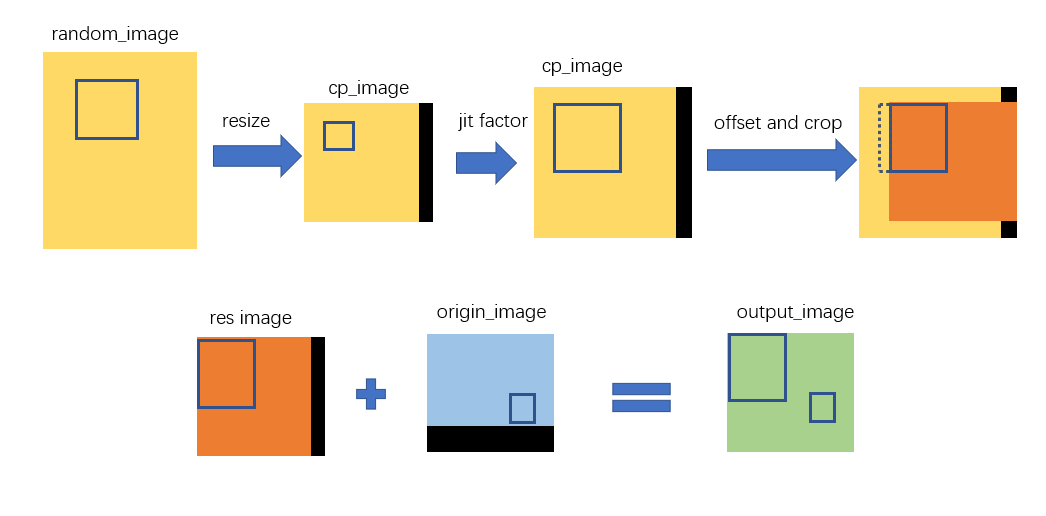

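First, randomly pick a non-background image (one guaranteed to contain at least one bbox), resize it to fit input size, and place it on a canvas of input size (e.g. 640*640). Then scale the whole canvas by jit_factor, take the larger of the two canvases (the original image and the new one) in each dimension, draw a random crop offset inside that large canvas and crop. If at least one box passes the check, blend the two images, which is the mixup.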

The rough flow is shown in the figure (the step of finding the largest canvas is omitted).
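The box bookkeeping and the blend can be checked by hand with a numpy-only sketch. The random parts (jit_factor, flip, crop offsets) are passed in explicitly so the numbers are reproducible; `mixup_boxes` and `blend` are hypothetical helpers that mirror the corresponding lines of `mixup`, not functions from YOLOX.

```python
import numpy as np


def mixup_boxes(boxes, ratio, flip, canvas_w, canvas_h, x_offset, y_offset, target_w, target_h):
    """Follow the mixed-in image's x1y1x2y2 boxes through the steps of mixup."""
    b = boxes.astype(np.float64)
    # adjust_box_anns: resize ratio and jit_factor combined, clipped to the enlarged canvas
    b[:, 0::2] = np.clip(b[:, 0::2] * ratio, 0, canvas_w)
    b[:, 1::2] = np.clip(b[:, 1::2] * ratio, 0, canvas_h)
    if flip:
        # mirroring swaps x1 and x2: new x1 = W - old x2, new x2 = W - old x1
        b[:, 0::2] = canvas_w - b[:, 0::2][:, ::-1]
    # crop: the crop's top-left corner becomes the new origin
    b[:, 0::2] = np.clip(b[:, 0::2] - x_offset, 0, target_w)
    b[:, 1::2] = np.clip(b[:, 1::2] - y_offset, 0, target_h)
    return b


def blend(origin_img, cp_img):
    """Equal-weight mixup as in the code: 0.5 * a + 0.5 * b in float32, back to uint8."""
    mixed = 0.5 * origin_img.astype(np.float32) + 0.5 * cp_img.astype(np.float32)
    return mixed.astype(np.uint8)


box = np.array([[10, 20, 30, 60]])
# ratio 2, flipped, 200 x 200 canvas, crop offset (100, 30), origin image 64 x 64
print(mixup_boxes(box, 2.0, True, 200, 200, 100, 30, 64, 64).tolist())  # [[40.0, 10.0, 64.0, 64.0]]
```

The `[:, ::-1]` in the flip is what keeps x1 < x2: without reversing the column order, the mirrored "x1" would be larger than the mirrored "x2".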

Next, the check function box_candidates:

```python
def box_candidates(box1, box2, wh_thr=2, ar_thr=20, area_thr=0.2):
    # box1(4,n), box2(4,n)
    # Compute candidate boxes which include following 5 things:
    # box1 before augment, box2 after augment, wh_thr (pixels), aspect_ratio_thr, area_ratio
    w1, h1 = box1[2] - box1[0], box1[3] - box1[1]
    w2, h2 = box2[2] - box2[0], box2[3] - box2[1]
    ar = np.maximum(w2 / (h2 + 1e-16), h2 / (w2 + 1e-16))  # aspect ratio
    return (
        (w2 > wh_thr)
        & (h2 > wh_thr)
        & (w2 * h2 / (w1 * h1 + 1e-16) > area_thr)
        & (ar < ar_thr)
    )  # candidates
```

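It compares each box after the shift with the same box before it. The four criteria are the shifted box's width, its height, its area relative to the original box, and its aspect ratio.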

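The comment talks about five things, but only four checks are implemented. As far as I can tell, the five items listed in the comment are the function's inputs (box1, box2, wh_thr, the aspect-ratio threshold and the area ratio) rather than five separate checks.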
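A worked example may make the four checks concrete. The boxes are made up for illustration, one per rejection reason; the function body is copied from above, and the first call uses wh_thr=5 because that is what mixup passes.

```python
import numpy as np


def box_candidates(box1, box2, wh_thr=2, ar_thr=20, area_thr=0.2):
    # box1: (4, n) boxes before augmentation, box2: (4, n) the same boxes after it
    w1, h1 = box1[2] - box1[0], box1[3] - box1[1]
    w2, h2 = box2[2] - box2[0], box2[3] - box2[1]
    ar = np.maximum(w2 / (h2 + 1e-16), h2 / (w2 + 1e-16))  # aspect ratio
    return (
        (w2 > wh_thr)                                # 1. still wide enough
        & (h2 > wh_thr)                              # 2. still tall enough
        & (w2 * h2 / (w1 * h1 + 1e-16) > area_thr)   # 3. kept enough of its area
        & (ar < ar_thr)                              # 4. not a thin sliver
    )


before = np.array([[0, 0, 100, 100],    # a: untouched
                   [0, 0, 100, 100],    # b: cropped to 10% of its area
                   [0, 0, 10, 40],      # c: cropped to 4 px wide
                   [0, 0, 200, 20]],    # d: cropped to 200 x 8 (aspect ratio 25)
                  dtype=float)
after = np.array([[0, 0, 100, 100],
                  [0, 0, 10, 100],
                  [0, 0, 4, 40],
                  [0, 0, 200, 8]], dtype=float)

keep = box_candidates(before.T, after.T, wh_thr=5)
print(keep.tolist())  # [True, False, False, False]
```

Box b fails only the area check, c only the width check and d only the aspect-ratio check; with the default wh_thr=2, box c would be kept.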

{% image https://cdn.jsdelivr.net/gh/dummerchen/My_Image_Bed03@image_bed_001/img/20210926215440.png ,alt='Final result: the two in the middle are mixup',height=60vh %}

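My own code

YOLOX and similar projects surely use all sorts of tricks to speed up the dataloader, such as wrapping the COCO annotations with pycocotools (a package I don't know well) and preloading, and I can't get through all of that in a short time. So I stripped those parts out; the loader is probably not very efficient. I'll add them back once I've become more of an expert.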

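```python
# -*- coding:utf-8 -*-
# @Author : Dummerfu
# @Contact : https://github.com/dummerchen
# @Time : 2021/9/25 14:06
import math
import random

import cv2
import matplotlib as mpl
import numpy as np
from matplotlib import pyplot as plt
from torch.utils.data import Dataset

# local modules from my project (not shown in this post): draw_box draws boxes on an
# image, VocDataSet is a VOC dataset class that provides pull_item
from draw_box_utli import draw_box
from VocDataset import VocDataSet

# use a font that can render Chinese labels, and keep the minus sign readable
mpl.rcParams['font.sans-serif'] = 'SimHei'
mpl.rcParams['axes.unicode_minus'] = False
```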

```python
def get_mosaic_coordinate(mosaic_image, mosaic_index, xc, yc, w, h, input_h, input_w):
    # TODO update doc
    # Returns the paste region on the (2*input_h, 2*input_w) canvas ("large") and the
    # matching region of the resized image ("small"); parts outside the canvas are cut off.
    # index0 to top left part of image
    if mosaic_index == 0:
        x1, y1, x2, y2 = max(xc - w, 0), max(yc - h, 0), xc, yc
        small_coord = w - (x2 - x1), h - (y2 - y1), w, h
    # index1 to top right part of image
    elif mosaic_index == 1:
        x1, y1, x2, y2 = xc, max(yc - h, 0), min(xc + w, input_w * 2), yc
        small_coord = 0, h - (y2 - y1), min(w, x2 - x1), h
    # index2 to bottom left part of image
    elif mosaic_index == 2:
        x1, y1, x2, y2 = max(xc - w, 0), yc, xc, min(input_h * 2, yc + h)
        small_coord = w - (x2 - x1), 0, w, min(y2 - y1, h)
    # index3 to bottom right part of image
    elif mosaic_index == 3:
        x1, y1, x2, y2 = xc, yc, min(xc + w, input_w * 2), min(input_h * 2, yc + h)  # noqa
        small_coord = 0, 0, min(w, x2 - x1), min(y2 - y1, h)
    return (x1, y1, x2, y2), small_coord
```

```python
def random_perspective(
    img,
    targets=(),
    degrees=10,
    translate=0.1,
    scale=(0.5, 1.5),  # must be a (min, max) tuple: the code reads scale[0] and scale[1]
    shear=10,
    perspective=0.0,
    border=(0, 0),
):
    # targets: (n, 5) array laid out as [x1, y1, x2, y2, cls]
    height = img.shape[0] + border[0] * 2  # shape(h,w,c)
    width = img.shape[1] + border[1] * 2

    # Center
    C = np.eye(3)
    C[0, 2] = -img.shape[1] / 2  # x translation (pixels)
    C[1, 2] = -img.shape[0] / 2  # y translation (pixels)

    # Rotation and Scale
    R = np.eye(3)
    a = random.uniform(-degrees, degrees)
    # a += random.choice([-180, -90, 0, 90])  # add 90deg rotations to small rotations
    s = random.uniform(scale[0], scale[1])
    # s = 2 ** random.uniform(-scale, scale)
    R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

    # Shear
    S = np.eye(3)
    S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180)  # x shear (deg)
    S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180)  # y shear (deg)

    # Translation
    T = np.eye(3)
    T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width  # x translation (pixels)
    T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height  # y translation (pixels)

    # Combined rotation matrix
    M = T @ S @ R @ C  # order of operations (right to left) is IMPORTANT

    ###########################
    # For Aug out of Mosaic
    # s = 1.
    # M = np.eye(3)
    ###########################

    if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any():  # image changed
        if perspective:
            img = cv2.warpPerspective(
                img, M, dsize=(width, height), borderValue=(114, 114, 114)
            )
        else:  # affine
            img = cv2.warpAffine(
                img, M[:2], dsize=(width, height), borderValue=(114, 114, 114)
            )

    # Transform label coordinates
    n = len(targets)
    if n:
        # warp points
        xy = np.ones((n * 4, 3))
        xy[:, :2] = targets[:, [0, 1, 2, 3, 0, 3, 2, 1]].reshape(
            n * 4, 2
        )  # x1y1, x2y2, x1y2, x2y1
        xy = xy @ M.T  # transform
        if perspective:
            xy = (xy[:, :2] / xy[:, 2:3]).reshape(n, 8)  # rescale
        else:  # affine
            xy = xy[:, :2].reshape(n, 8)

        # create new boxes
        x = xy[:, [0, 2, 4, 6]]
        y = xy[:, [1, 3, 5, 7]]
        xy = np.concatenate((x.min(1), y.min(1), x.max(1), y.max(1))).reshape(4, n).T

        # clip boxes
        xy[:, [0, 2]] = xy[:, [0, 2]].clip(0, width)
        xy[:, [1, 3]] = xy[:, [1, 3]].clip(0, height)

        # filter candidates
        i = box_candidates(box1=targets[:, :4].T * s, box2=xy.T)
        targets = targets[i]
        targets[:, :4] = xy[i]

    return img, targets
```

```python
def box_candidates(box1, box2, wh_thr=2, ar_thr=20, area_thr=0.2):
    # box1(4,n), box2(4,n)
    # Compute candidate boxes which include following 5 things:
    # box1 before augment, box2 after augment, wh_thr (pixels), aspect_ratio_thr, area_ratio
    w1, h1 = box1[2] - box1[0], box1[3] - box1[1]
    w2, h2 = box2[2] - box2[0], box2[3] - box2[1]
    ar = np.maximum(w2 / (h2 + 1e-16), h2 / (w2 + 1e-16))  # aspect ratio
    return (
        (w2 > wh_thr)
        & (h2 > wh_thr)
        & (w2 * h2 / (w1 * h1 + 1e-16) > area_thr)
        & (ar < ar_thr)
    )  # candidates


def adjust_box_anns(bbox, scale_ratio, padw, padh, w_max, h_max):
    # scale x1y1x2y2 boxes, shift them by (padw, padh) and clip to [0, w_max] x [0, h_max]
    bbox[:, 0::2] = np.clip(bbox[:, 0::2] * scale_ratio + padw, 0, w_max)
    bbox[:, 1::2] = np.clip(bbox[:, 1::2] * scale_ratio + padh, 0, h_max)
    return bbox
```

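```python
class MasaicDataset(Dataset):
    def __init__(
        self, dataset, input_size=(640, 640), mosaic=True, preproc=None,
        degrees=10.0, translate=0.1, mosaic_scale=(0.5, 1.5),
        mixup_scale=(0.5, 1.5), shear=2.0, perspective=0.0,
        enable_mixup=True, mosaic_prob=1.0, mixup_prob=1.0, *args
    ):
        """
        Args:
            dataset (Dataset): PyTorch dataset object; must provide pull_item(index).
            input_size (tuple): network input size (h, w); the mosaic canvas is twice this.
            mosaic (bool): enable mosaic augmentation or not.
            preproc (func): preprocessing for the output (currently unused).
            degrees (float): maximum rotation angle in degrees.
            translate (float): maximum translation as a fraction of the image size.
            mosaic_scale (tuple): (min, max) scale range used by random_perspective.
            mixup_scale (tuple): (min, max) range of jit_factor in mixup.
            shear (float): maximum shear angle in degrees.
            perspective (float): if non-zero, cv2.warpPerspective is used instead of
                cv2.warpAffine.
            enable_mixup (bool): enable mixup or not.
            mosaic_prob (float): probability of applying mosaic.
            mixup_prob (float): probability of applying mixup after mosaic.
            *args (tuple): Additional arguments for mixup random sampler.
        """
        self._dataset = dataset
        self.input_dim = input_size
        self.preproc = preproc
        self.degrees = degrees
        self.translate = translate
        self.scale = mosaic_scale
        self.shear = shear
        self.perspective = perspective
        self.mixup_scale = mixup_scale
        self.enable_mosaic = mosaic
        self.enable_mixup = enable_mixup
        self.mosaic_prob = mosaic_prob
        self.mixup_prob = mixup_prob

    def __len__(self):
        return len(self._dataset)
```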

```python
    def __getitem__(self, idx):
        if self.enable_mosaic and random.random() < self.mosaic_prob:
            mosaic_labels = []
            input_h, input_w = self.input_dim[0], self.input_dim[1]
            # input_h,input_w=2600,4624

            # yc, xc = s, s  # mosaic center x, y
            # the canvas is (2 * input_h, 2 * input_w); the center is drawn from its middle
            yc = int(random.uniform(0.5 * input_h, 1.5 * input_h))
            xc = int(random.uniform(0.5 * input_w, 1.5 * input_w))
            # for debugging, the center can be fixed instead, e.g. yc = xc = 640

            # 3 additional image indices
            indices = [idx] + [random.randint(0, len(self._dataset) - 1) for _ in range(3)]

            for i_mosaic, index in enumerate(indices):
                img, target = self._dataset.pull_item(index)
                _labels = target['labels']
                h0, w0 = target['image_info']  # orig hw
                scale = min(1. * input_h / h0, 1. * input_w / w0)
                # resize img to fit input size
                img = cv2.resize(
                    img, (int(w0 * scale), int(h0 * scale)), interpolation=cv2.INTER_LINEAR
                )
                # generate output mosaic image
                (h, w, c) = img.shape[:3]
                # draw_box(
                #     img, _labels[:, :4],
                #     classes=_labels[:, -1],
                #     category_index=self._dataset.name2num,
                #     scores=np.ones(shape=(len(_labels[:, -1]))),
                #     thresh=0
                # )
                if i_mosaic == 0:
                    mosaic_img = np.full((input_h * 2, input_w * 2, c), 114, dtype=np.uint8)

                # suffix l means large image, while s means small image in mosaic aug.
                (l_x1, l_y1, l_x2, l_y2), (s_x1, s_y1, s_x2, s_y2) = get_mosaic_coordinate(
                    mosaic_img, i_mosaic, xc, yc, w, h, input_h, input_w
                )

                mosaic_img[l_y1:l_y2, l_x1:l_x2] = img[s_y1:s_y2, s_x1:s_x2]
                padw, padh = l_x1 - s_x1, l_y1 - s_y1

                labels = _labels.copy()
                # boxes are pixel x1y1x2y2 here: scale both corners, then shift onto the canvas
                # (YOLOX's comment says "Normalized xywh to pixel xyxy format")
                if _labels.size > 0:
                    labels[:, 0] = scale * _labels[:, 0] + padw
                    labels[:, 1] = scale * _labels[:, 1] + padh
                    labels[:, 2] = scale * _labels[:, 2] + padw
                    labels[:, 3] = scale * _labels[:, 3] + padh
                mosaic_labels.append(labels)

            if len(mosaic_labels):
                mosaic_labels = np.concatenate(mosaic_labels, 0)
                np.clip(mosaic_labels[:, 0], 0, 2 * input_w, out=mosaic_labels[:, 0])
                np.clip(mosaic_labels[:, 1], 0, 2 * input_h, out=mosaic_labels[:, 1])
                np.clip(mosaic_labels[:, 2], 0, 2 * input_w, out=mosaic_labels[:, 2])
                np.clip(mosaic_labels[:, 3], 0, 2 * input_h, out=mosaic_labels[:, 3])

            mosaic_img, mosaic_labels = random_perspective(
                mosaic_img,
                mosaic_labels,
                degrees=self.degrees,
                translate=self.translate,
                scale=self.scale,
                shear=self.shear,
                perspective=self.perspective,
                border=[-input_h // 2, -input_w // 2],
            )  # border to remove

            # -----------------------------------------------------------------
            # CopyPaste: https://arxiv.org/abs/2012.07177
            # -----------------------------------------------------------------
            if (
                self.enable_mixup
                and not len(mosaic_labels) == 0
                and random.random() < self.mixup_prob
            ):
                mosaic_img, mosaic_labels = self.mixup(mosaic_img, mosaic_labels, self.input_dim)
            # mix_img, padded_labels = self.preproc(mosaic_img, mosaic_labels, self.input_dim)
            img_info = (mosaic_img.shape[1], mosaic_img.shape[0])

            # visualization for debugging; remove it when used in a real dataloader
            draw_box(
                mosaic_img, mosaic_labels[:, :4],
                classes=mosaic_labels[:, -1],
                category_index=self._dataset.num2name,
                scores=np.ones(shape=(len(mosaic_labels[:, -1]))),
                thresh=0
            )
            # return whatever format your training loop needs
            return mosaic_img, mosaic_labels, img_info

        else:
            img, target = self._dataset.pull_item(idx)
            # img, label = self.preproc(img, label, self.input_dim)
            # note: this branch returns (img, target) while the mosaic branch returns
            # (img, labels, img_info); make them consistent before using a DataLoader
            return img, target
```

```python
    def mixup(self, origin_img, origin_labels, input_dim):
        jit_factor = random.uniform(*self.mixup_scale)
        FLIP = random.uniform(0, 1) > 0.5
        cp_labels = []
        img = None
        # keep sampling until the image has at least one box; this version calls
        # pull_item, so it reads the image on every try (YOLOX checks load_anno first)
        while len(cp_labels) == 0:
            cp_index = random.randint(0, self.__len__() - 1)
            img, target = self._dataset.pull_item(cp_index)
            cp_labels = target['labels']
        # visualization for debugging; remove it when used in a real dataloader
        draw_box(img, cp_labels[:, :4], cp_labels[:, -1], self._dataset.num2name,
                 scores=np.ones(len(cp_labels[:, -1])))

        if len(img.shape) == 3:
            cp_img = np.ones((input_dim[0], input_dim[1], 3), dtype=np.uint8) * 114
        else:
            cp_img = np.ones(input_dim, dtype=np.uint8) * 114

        cp_scale_ratio = min(input_dim[0] / img.shape[0], input_dim[1] / img.shape[1])
        resized_img = cv2.resize(
            img,
            (int(img.shape[1] * cp_scale_ratio), int(img.shape[0] * cp_scale_ratio)),
            interpolation=cv2.INTER_LINEAR,
        )
        cp_img[
            : int(img.shape[0] * cp_scale_ratio), : int(img.shape[1] * cp_scale_ratio)
        ] = resized_img

        # scale the whole canvas by jit_factor
        cp_img = cv2.resize(
            cp_img,
            (int(cp_img.shape[1] * jit_factor), int(cp_img.shape[0] * jit_factor)),
        )
        cp_scale_ratio *= jit_factor

        if FLIP:
            cp_img = cp_img[:, ::-1, :]

        origin_h, origin_w = cp_img.shape[:2]
        target_h, target_w = origin_img.shape[:2]
        padded_img = np.zeros(
            (max(origin_h, target_h), max(origin_w, target_w), 3), dtype=np.uint8
        )
        padded_img[:origin_h, :origin_w] = cp_img

        # random crop offset inside the larger canvas
        x_offset, y_offset = 0, 0
        if padded_img.shape[0] > target_h:
            y_offset = random.randint(0, padded_img.shape[0] - target_h - 1)
        if padded_img.shape[1] > target_w:
            x_offset = random.randint(0, padded_img.shape[1] - target_w - 1)
        padded_cropped_img = padded_img[
            y_offset: y_offset + target_h, x_offset: x_offset + target_w
        ]

        cp_bboxes_origin_np = adjust_box_anns(
            cp_labels[:, :4].copy(), cp_scale_ratio, 0, 0, origin_w, origin_h
        )
        if FLIP:
            cp_bboxes_origin_np[:, 0::2] = (
                origin_w - cp_bboxes_origin_np[:, 0::2][:, ::-1]
            )
        # shift the boxes into the crop and clip them to its size
        cp_bboxes_transformed_np = cp_bboxes_origin_np.copy()
        cp_bboxes_transformed_np[:, 0::2] = np.clip(
            cp_bboxes_transformed_np[:, 0::2] - x_offset, 0, target_w
        )
        cp_bboxes_transformed_np[:, 1::2] = np.clip(
            cp_bboxes_transformed_np[:, 1::2] - y_offset, 0, target_h
        )
        keep_list = box_candidates(cp_bboxes_origin_np.T, cp_bboxes_transformed_np.T, 5)

        # blend only if at least one box survived the crop
        if keep_list.sum() >= 1.0:
            cls_labels = cp_labels[keep_list, 4:5].copy()
            box_labels = cp_bboxes_transformed_np[keep_list]
            labels = np.hstack((box_labels, cls_labels))
            origin_labels = np.vstack((origin_labels, labels))
            origin_img = origin_img.astype(np.float32)
            origin_img = 0.5 * origin_img + 0.5 * padded_cropped_img.astype(np.float32)

        return origin_img.astype(np.uint8), origin_labels
```

```python
if __name__ == '__main__':
    # vocdataset=VocDataSet(voc_root=r'E:\py_exercise\Dataset\pear_dataset\voc',)
    vocdataset = VocDataSet(
        voc_root=r'E:\py_exercise\deep-learning-for-image-processing\pytorch_object_detection\faster_rcnn\taboca\Tobacco',
        image_folder_name='JPEGImages'
    )
    dataset = MasaicDataset(
        dataset=vocdataset,
    )
    # fetch one sample; the draw_box call inside __getitem__ shows the result
    next(iter(dataset))
```